…and his goons at OSHA:

Author Archives: Scott Alfter

Might need to pick up an animal-style Double-Double this evening

In-N-Out says “no” to medical apartheid:

Who does he think he’s fooling?

They really do think we’re a bunch of idiots. One might consider that as a massive case of projection:

Flip-flopping

Using Duplicity to back up to Linode Object Storage

I migrated my home server from Gentoo Linux to Flatcar Container Linux a little while back, but hadn’t yet migrated the document backups the old server was making. I was using Duplicity to back up documents to the free space within a Linode VPS, but an increase in the size of the backup set was causing the VPS to run out of disk space. With the new server, I decided to try moving the backups from the VPS to Object Storage. (This is an S3-compatible system, so you can probably adapt these instructions to other S3-compatible storage systems…or to Amazon S3 itself. In figuring this out, I started with example configurations for other S3-compatible services and tweaked where necessary.)

I’m using the wernight/duplicity container to back up files. It needs to be provided two persistent volumes, one for your GnuPG keyring and another for cache. The data to be backed up should also be provided to it as a volume. Access credentials for Linode Object Storage should be stored in an environment file.

To make working with containerized Duplicity more like Duplicity on a non-containerized system, you might wrap it in a shell script like this:

#!/usr/bin/env bash

docker run -it --rm --user 1000 --env-file /mnt/ssd/container-state/duplicity/env -v /mnt/ssd/container-state/duplicity/gnupg:/home/duplicity/.gnupg -v /mnt/ssd/container-state/duplicity/cache:/home/duplicity/.cache/duplicity -v /mnt/storage/files:/mnt/storage/files wernight/duplicity "$@"

(BTW, if you’re not running a container system, you can invoke an installed copy of Duplicity with the same options taken by this script.)

My server hosts only my files, so running Duplicity with my uid is sufficient. On a multi-user system, you might want to use root (uid 0) instead. Your GnuPG keyring gets mapped to /home/duplicity/.gnupg and persistent cache gets mapped to /home/duplicity/.cache/duplicity. /mnt/storage/files is where the files I want to back up are located.

The zeroth thing you should do, if you don’t already have one, is create a GnuPG key. You can do that here with the script above, which I named duplicity on my server:

./duplicity gpg --generate-key

./duplicity gpg --export-secret-keys -a

The first thing you need to do (if you didn’t just create your key above) is to store your GnuPG (or PGP) key with Duplicity. Your backups will be encrypted with it:

./duplicity gpg --import /mnt/storage/files/documents/my_key.asc

Once it’s imported, you should probably set the trust on it to “ultimate,” since it’s your key:

./duplicity gpg --update-trustdb

One last thing we need is your key’s ID…or, more specifically, the last 8 digits of it, as this is how you tell Duplicity what key to use. Take a look at the output of this command:

./duplicity gpg --list-secret-keys

One of these (possibly the only one in there) will be the key you created or imported. It has a 40-digit hexadecimal ID (its fingerprint). We need just the last 8 digits. For mine, it’s 92C7689C.
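If you’d rather not pick those digits out by eye, something like the following might do it. This is only a sketch: it assumes GnuPG’s machine-readable --with-colons output, in which each “fpr” record carries the fingerprint in its tenth field, and short_key_id is a name made up for this example.

```shell
#!/usr/bin/env bash

# short_key_id (hypothetical helper): read `gpg --with-colons` output on
# stdin and print the last 8 hex digits of the first fingerprint found.
# Returns non-zero if no fingerprint record is present.
short_key_id() {
  local id
  # "fpr" records hold the fingerprint in field 10; keep its last 8 characters
  id=$(awk -F: '$1 == "fpr" { print substr($10, length($10) - 7); exit }')
  [ -n "$id" ] && printf '%s\n' "$id"
}

# Usage (assumes the wrapper script is named ./duplicity, as above):
#   ./duplicity gpg --list-secret-keys --with-colons | short_key_id
```

The first “fpr” record belongs to the primary key, which is why the helper stops there; if the keyring holds several keys, you’d still want to check that it picked the right one.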

Next, pull up the Linode Manager in your browser and select Object Storage from the menu on the left. Click “Create Bucket” and follow the prompts; make note of the name and the URL associated with the bucket, as we’ll need those later. Switch to the access keys tab and click “Create Access Key.” Give it a label, click “Submit,” and make note of both the access key and secret key that are provided.

Your GPG key’s passphrase and Object Storage access key should be stored in an environment file. Mine’s named /mnt/ssd/container-state/duplicity/env and contains the following:

PASSPHRASE=<gpg-key-passphrase>

AWS_ACCESS_KEY_ID=<object-storage-access-key>

AWS_SECRET_ACCESS_KEY=<object-storage-secret-key>

Now we can run a full backup. Running Duplicity on Linode Object Storage (and maybe other S3-compatible storage) requires the --allow-source-mismatch and --s3-use-new-style options:

./duplicity duplicity --allow-source-mismatch --s3-use-new-style --asynchronous-upload --encrypt-key 92C7689C full /mnt/storage/files/documents s3://us-southeast-1.linodeobjects.com/document-backup

“full” specifies a full backup; replace with “incr” for an incremental backup. Linode normally provides the URL with the bucket name as a subdomain of the server instead of as part of the path; you need to rewrite it from https://bucket-name.server-addr to s3://server-addr/bucket-name.
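The URL rewrite can be sketched with plain shell parameter expansion. The function name to_s3_url is hypothetical, and the sketch assumes the bucket name itself contains no dots, so that everything before the first dot is the bucket and everything after it is the server address.

```shell
#!/usr/bin/env bash

# to_s3_url (hypothetical helper): turn https://bucket-name.server-addr
# into s3://server-addr/bucket-name.
# Assumption: the bucket name contains no dots.
to_s3_url() {
  local hostpart bucket server
  hostpart=${1#https://}     # strip the scheme
  hostpart=${hostpart%%/*}   # drop any trailing slash or path
  bucket=${hostpart%%.*}     # text before the first dot is the bucket name
  server=${hostpart#*.}      # everything after the first dot is the server
  printf 's3://%s/%s\n' "$server" "$bucket"
}

to_s3_url "https://document-backup.us-southeast-1.linodeobjects.com"
# prints s3://us-southeast-1.linodeobjects.com/document-backup
```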

After sending a full backup, you probably want to delete the previous full backups:

./duplicity duplicity --allow-source-mismatch --s3-use-new-style --asynchronous-upload --encrypt-key 92C7689C --force remove-all-but-n-full 1 s3://us-southeast-1.linodeobjects.com/document-backup

You can make sure your backups are good:

./duplicity duplicity --allow-source-mismatch --s3-use-new-style --asynchronous-upload --encrypt-key 92C7689C verify s3://us-southeast-1.linodeobjects.com/document-backup /mnt/storage/files/documents

Change “verify” to “restore” and you can get your files back if you’ve lost them. Note that restore won’t overwrite a file that exists, so a full restore should be into an empty directory.

Setting up cronjobs (or an equivalent) to do these operations on a regular basis is left as an exercise for the reader. :)
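As a starting point for that exercise, here’s one way a nightly job might pick between a full and an incremental backup. It’s a sketch under assumptions, not a tested setup: backup_mode is a made-up helper, and the “full on the first of the month, incremental otherwise” schedule is just an example policy.

```shell
#!/usr/bin/env bash

# backup_mode (hypothetical helper): print "full" on the first day of the
# month and "incr" on every other day. The day of the month (01..31) can
# be passed in for testing; otherwise today's date is used.
backup_mode() {
  local day
  day=${1:-$(date +%d)}
  if [ "$day" = "01" ]; then
    echo full
  else
    echo incr
  fi
}

# Example use from a nightly cron job. ./duplicity is the wrapper script
# from earlier. "docker run -it" expects a terminal, so the wrapper would
# likely need "-it" removed before cron can run it.
#   ./duplicity duplicity --allow-source-mismatch --s3-use-new-style \
#     --encrypt-key 92C7689C "$(backup_mode)" \
#     /mnt/storage/files/documents \
#     s3://us-southeast-1.linodeobjects.com/document-backup
```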

Installing Flatcar Container Linux on Linode

I didn’t see much out there that describes how to set up a Flatcar Container Linux VM at Linode. What follows is what I came up with; it’ll put up a basic system that’s accessible via SSH.

You’ll need a Linux system to prep the installation, as well as about 12 GB of free space. The QEMU-compatible image that is available for download is in qcow2 format; Linode needs a raw-format image. You can download and convert the most recent image as follows (you’ll need QEMU installed, however your distro provides for that):

wget https://stable.release.flatcar-linux.net/amd64-usr/current/flatcar_production_qemu_image.img.bz2 && bunzip2 flatcar_production_qemu_image.img.bz2 && qemu-img convert -f qcow2 -O raw flatcar_production_qemu_image.img tmp.img && mv tmp.img flatcar_production_qemu_image.img && bzip2 -9 flatcar_production_qemu_image.img

You also need a couple of installation tools for Flatcar Container Linux: the installation script and the configuration transpiler. The most recent script is available on GitHub:

wget https://raw.githubusercontent.com/flatcar-linux/init/flatcar-master/bin/flatcar-install -O flatcar-install.sh && chmod +x flatcar-install.sh

So’s the transpiler…you can grab the binary from the releases page. You’ll want to grab the latest ct-v*-x86_64-unknown-linux-gnu and rename it to ct.

Next, we need a configuration script. Here’s a basic YAML file that enables SSH login. You’ll want to substitute your own username and SSH public key for mine. Setting the hostname and timezone to appropriate values for you might also be a good idea. Save this as config.yaml:

passwd:
  users:
    - name: salfter
      groups:
        - sudo
        - wheel
        - docker # or else Docker won't work
      ssh_authorized_keys:
        - ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIBJQgbySEtaT8SqZ37tT7S4Z/gZeGH+V5vGZ9i9ELpmU salfter@janeway
etcd:
  # go to https://discovery.etcd.io/new to get a new ID
  discovery: https://discovery.etcd.io/c4e7dd71d5a3eae58b6b5eb45fcba490
storage:
  disks:
    - device: /dev/sda
    - device: /dev/sdb
  filesystems:
    - name: "storage"
      mount:
        device: "/dev/sdb"
        format: "ext4"
        label: "storage"
        # wipe_filesystem: true
  directories:
    - filesystem: "storage"
      path: "/docker"
      mode: 0755
    - filesystem: "storage"
      path: "/containerd"
      mode: 0755
    - filesystem: "storage"
      path: "/container-state"
      mode: 0755
  files:
    # set hostname
    - path: /etc/hostname
      filesystem: root
      mode: 0644
      contents:
        inline: |
          flatcar
    # /etc/resolv.conf needs to be a file, not a symlink
    - path: /etc/resolv.conf
      filesystem: root
      mode: 0644
      contents:
        inline: |
  links:
    # set timezone
    - path: /etc/localtime
      filesystem: root
      overwrite: true
      target: /usr/share/zoneinfo/US/Pacific
systemd:
  units:
    # mount the spinning rust
    - name: mnt-storage.mount
      enabled: true
      contents: |
        [Unit]
        Before=local-fs.target
        [Mount]
        What=/dev/disk/by-label/storage
        Where=/mnt/storage
        Type=ext4
        [Install]
        WantedBy=local-fs.target
    # store containers on spinning rust
    - name: var-lib-docker.mount
      enabled: true
      contents: |
        [Unit]
        Before=local-fs.target
        [Mount]
        What=/mnt/storage/docker
        Where=/var/lib/docker
        Type=none
        Options=bind
        [Install]
        WantedBy=local-fs.target
    - name: var-lib-containerd.mount
      enabled: true
      contents: |
        [Unit]
        Before=local-fs.target
        [Mount]
        What=/mnt/storage/containerd
        Where=/var/lib/containerd
        Type=none
        Options=bind
        [Install]
        WantedBy=local-fs.target
    # Ensure docker starts automatically instead of being socket-activated
    - name: docker.socket
      enabled: false
    - name: docker.service
      enabled: true
docker:

Use ct to compile config.yaml to config.json, which is what the Flatcar installer will use:

./ct --in-file config.yaml >config.json

Now it’s time to set up a new Linode. Get whatever size you want. Set it up with any available distro; we’re not going to use it. (I initially intended to leave a small Alpine Linux configuration up to bootstrap and update the system, but you really don’t need it. Uploading and installation can be done with the Finnix rescue system Linode provides.) Shut down the new host, delete both the root and swap disks that Linode creates, and create two new ones: a 10-GB Flatcar boot disk and a data disk that uses the rest of your available space. Configure the boot disk as /dev/sda and the data disk as /dev/sdb.

Reboot the node in rescue mode. This will (as of this writing, at least) bring it up in Finnix from a CD image. Launch the Lish console and enable SSH login:

passwd root && systemctl start sshd && ifconfig

Mount the storage partition so we can upload to it:

mkdir /mnt/storage && mount /dev/sdb /mnt/storage && mkdir /mnt/storage/install

Make note of the node’s IP address; you’ll need it. I’ll use 12.34.56.78 as an example below.

Back on your computer, upload the needed files to the node…it’ll take a few minutes:

scp config.json flatcar-install.sh flatcar_production_qemu_image.img.bz2 root@12.34.56.78:/mnt/storage/install/

Back at the node, begin the installation…it’ll take a few more minutes:

./flatcar-install.sh -f flatcar_production_qemu_image.img.bz2 -d /dev/sda -i config.json

Once the installation is complete, shut down the node. In the Linode Manager page for your node, go to the Configurations tab and edit the configuration. For “Select a Kernel” under “Boot Settings,” change from the default “GRUB 2” to “Direct Disk.” /dev/sda is a hard-drive image, not a filesystem image; this will tell Linode to run the MBR on /dev/sda, which will start the provided GRUB and load the kernel.

Now, you can bring up your new Flatcar node. If you still have the console window up, you should see that it doesn’t take long at all to boot…maybe 15 seconds or so once it gets going. Once it’s up, you can SSH in with the key you configured.

From here, you can reconfigure more or less like any other Flatcar installation. If you need to redo the configuration, probably the easiest way to do that is to upload your config.json to /usr/share/oem/config.ign, touch /boot/flatcar/first_boot, and reboot. This will reread the configuration, which is useful for adding new containers, creating a persistent SSH configuration, etc.
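The reconfiguration steps might be wrapped up something like this. It’s a sketch: stage_reprovision is a made-up name, and the optional second argument (a root prefix) is only there so you can try it against a scratch directory before pointing it at the real filesystem.

```shell
#!/usr/bin/env bash

# stage_reprovision (hypothetical helper): copy a compiled config into
# place and set the first-boot flag so it gets reread on the next boot.
#   $1 = path to config.json
#   $2 = optional root prefix for a dry run (defaults to the real root)
stage_reprovision() {
  local config=$1 root=${2:-}
  [ -f "$config" ] || { echo "no such file: $config" >&2; return 1; }
  cp "$config" "$root/usr/share/oem/config.ign" &&
    touch "$root/boot/flatcar/first_boot"
}

# On the node, as root:
#   stage_reprovision config.json && reboot
```

The reboot is left outside the function on purpose, so that nothing restarts the node until both files are known to be in place.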

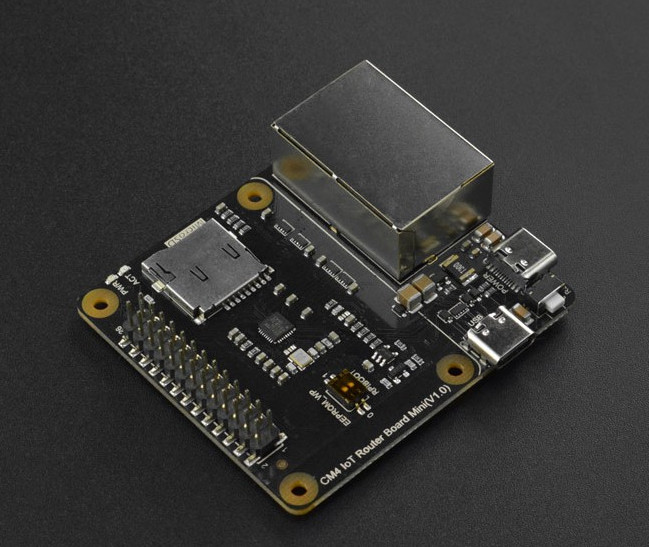

RPi CM4 + DFRobot IoT Router Carrier Board Mini + OpenWRT 21.02: getting it working

A little while back, I ran across this page about a couple of boards that turn a Raspberry Pi Compute Module 4 into a router. I’ve been running an Asus RT-AC56U for a while now and had been mulling over some upgrade options, and this sounded like a decent way to get OpenWRT back on my network. (I’d previously used it years ago on a Linksys WRT54GL, the OG router for which OpenWRT was originally developed, but never got around to running it on the newer router because there’s no support for 802.11ac on its Broadcom WiFi hardware.)

Of the two options presented, I picked the DFRobot IoT Router Carrier Board Mini because its second Ethernet port is connected directly to the CM4’s PCI Express port. (The other option inserted a USB 3.0 interface in between the two.) Nobody on this side of the pond had these in stock, but The Pi Hut had them available and shipping from the UK to the US didn’t take too long. (As I write this, they’re out of stock on all varieties of the Compute Module 4, so you’ll need to look around a bit to see if anybody has them at a not ridiculously marked-up price.)

I wanted to put stock OpenWRT on it, but the image they provide is missing the Realtek 8169 modules needed to get the second Ethernet port on the carrier board working. DFRobot provides an image with the needed modules already installed, but it’s of a 21.02 prerelease. I tried upgrading it to 21.02 final, but it wouldn’t accept it.

Through a bit of trial and error, I figured out the modules I needed to add to the stock image. They could be put on there while the stack was connected to my computer to flash the firmware. Once that was done, I extracted the OpenWRT configuration from the DFRobot image and copied it over as well. With all of that in place, it now boots up with the LAN interface on the PCIe Realtek interface (the one closer to the USB-C power connector) and the WAN interface on the CM4’s Broadcom interface (the other one). Getting it working was something like what follows. (Note: I bought a CM4 with onboard eMMC; the instructions reflect this. If you bought one without, you’ll need to write the firmware to a MicroSD card, which might actually be a bit easier to set up in hindsight. I wanted fewer parts, though, and I think eMMC is supposed to be a little bit faster. Also, these instructions assume you’re using Linux. Mac OS X might be slightly different. Windows will probably be considerably different, and an attempt at accessing the eMMC from Windows 10 according to DFRobot’s instructions failed.)

- Plug the CM4 into the carrier board. Be careful and make sure the two 100-pin connectors are properly aligned.

- Download the OpenWRT 21.02 release image and decompress it.

- Check out the Raspberry Pi usbboot tool and build it:

git clone --depth=1 https://github.com/raspberrypi/usbboot && cd usbboot && make

(note: you need libusb to build and use this)

- Peel the protective tape off of the switch block and use a small pointy object to flip the “USBBOOT” switch on (toward the MicroSD slot).

- Plug a USB A-to-C cable into the carrier’s port labeled “USB.” Plug a USB-C power supply into the port labeled “POWER.”

- Bring up the CM4 as a storage device:

sudo ./rpiboot

Determine which device node it grabbed (dmesg is good for this); in my case, it was /dev/sda.

- Flash the OpenWRT image:

dd if=openwrt-21.02.0-bcm27xx-bcm2711-rpi-4-ext4-factory.img of=/dev/sda bs=512 status=progress

- Resize /dev/sda2 to take up the rest of the storage space; gparted is a good tool for this.

- Mount /dev/sda2 someplace (I used /mnt/usbdisk as it was already there and not in use) and create a new directory:

sudo mkdir /mnt/usbdisk/ipks

- Download these OpenWRT packages to get the Realtek 8169 working: r8169-firmware kmod-mii kmod-libphy kmod-phy-realtek kmod-r8169. Save them to /mnt/usbdisk/ipks.

- Download and uncompress the DFRobot prerelease image; we need to extract its config files.

- We need to find the start of the root partition within the image, so run

sudo gparted openwrt-bcm27xx-bcm2711-rpi-4-ext4-factory.img

right-click on the second partition, and click Information. Scroll up in the window that pops up to get the location of the first sector; for the image I downloaded, it was 147456. Multiplying this by 512 (the block size in bytes), we get 75497472; keep this number in mind. Close gparted.

- Mount the root partition and copy the config files over to the CM4:

sudo mount -o loop,ro,offset=75497472 openwrt-bcm27xx-bcm2711-rpi-4-ext4-factory.img /mnt/tmp && sudo cp /mnt/tmp/etc/config/* /mnt/usbdisk/etc/config/

- Unmount both partitions:

sudo umount /mnt/usbdisk && sudo umount /mnt/tmp

Then unplug the cables from the carrier board.

- Plug a USB-to-TTL-serial cable into the carrier board’s GPIO header: black to pin 6, white to pin 8, green to pin 10. Leave the red wire disconnected. Plug the USB end into your computer and note which device node it grabs (probably /dev/ttyUSB0). Fire up your favorite comm program (Minicom, perhaps?) on that port at 115.2 kbps:

minicom -D /dev/ttyUSB0 -b 115200

- Plug the USB-C power supply back into the “POWER” connector. Watch the boot messages scroll by for a few seconds, and press Enter to get a root prompt in OpenWRT.

- Install the modules we stored in /ipks:

cd /ipks && for i in r8169-firmware_20201118-3_aarch64_cortex-a72.ipk kmod-libphy_5.4.143-1_aarch64_cortex-a72.ipk kmod-phy-realtek_5.4.143-1_aarch64_cortex-a72.ipk kmod-mii_5.4.143-1_aarch64_cortex-a72.ipk kmod-r8169_5.4.143-1_aarch64_cortex-a72.ipk; do opkg install "$i"; done

- Shut down OpenWRT:

poweroff

Wait for the green LED to quit blinking. Unplug the power and flip the USBBOOT switch back to “off” (away from the MicroSD slot).

- Disconnect the serial cable and run a network cable from the LAN port (the one closer to the power connector) to your computer. Plug the power back in, wait a few seconds for it to boot, and go to https://192.168.1.1; it should bring up OpenWRT’s LuCI interface. You’re done! Configure OpenWRT to your liking, then move it closer to your network gear and run cables from the LAN port to an empty switch port and from the WAN port to whatever provides your Internet (cable modem, DSL modem, or whatever).
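The offset arithmetic in the steps above (first sector times block size) is easy to let the shell do. A small sketch, with partition_offset being a made-up name and 512 bytes assumed as the default sector size:

```shell
#!/usr/bin/env bash

# partition_offset (hypothetical helper): byte offset of a partition,
# given its first sector and the sector size (512 bytes by default).
partition_offset() {
  local first_sector=$1 sector_size=${2:-512}
  echo $(( first_sector * sector_size ))
}

partition_offset 147456
# prints 75497472, the value given to mount's offset= option
```

If your image reports a different first sector, plug that number in and use the result in place of 75497472.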

All that’s left now is to put it in a case (either 3D-printed, or maybe just the laser-cut case from DFRobot) and add a wireless access point. I’ve had good luck with a bunch of Cisco WAP150s at work, but I’m open to suggestions. (I could’ve gotten one of the WiFi-equipped CM4s, but my understanding is that since they’re connected over SPI to the CM4’s SoC, their speed is not so good.)

A Venn diagram, for your edification

More timely than ever

It’s like he had his finger on the pulse of The Current Year. How is this not the perfect description of our predicament?

The urge to save humanity is almost always a false front for the urge to rule.

H.L. Mencken