This pulls together tips from https://christitus.com/windows-11-perfect-install/, https://christitus.com/install-windows-the-arch-linux-way/, https://blogs.oracle.com/virtualization/post/install-microsoft-windows-11-on-virtualbox, and some other sources I’ve forgotten. It’s mainly aimed at getting Win11 running under QEMU on Gentoo Linux, but should also work for bare-metal installs, QEMU on other platforms, or other virtualization platforms (VMware, VirtualBox, etc.).

- Verify kernel prerequisites as described in https://wiki.gentoo.org/wiki/QEMU
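Once the kernel is configured, a short shell sketch like the following can do a quick sanity check. It confirms that the CPU advertises hardware virtualization (`vmx` for Intel VT-x, `svm` for AMD-V) and that `/dev/kvm` is usable by your account. This is only a partial check and assumes a Linux host with `/proc/cpuinfo`. It does not verify the individual kernel options on the wiki page. The helper names `cpu_virt_flag` and `kvm_usable` are hypothetical.

```sh
#!/bin/sh
# Partial KVM sanity check (sketch). It does NOT verify the individual
# kernel options listed on the Gentoo QEMU wiki page.

# Print "vmx" (Intel VT-x) or "svm" (AMD-V) if that flag appears as a whole
# word in a cpuinfo-style file; print nothing if neither is found.
cpu_virt_flag() {
    cpuinfo="${1:-/proc/cpuinfo}"
    if grep -qsw vmx "$cpuinfo"; then
        echo vmx
    elif grep -qsw svm "$cpuinfo"; then
        echo svm
    fi
}

# Succeed only if the KVM device is a character device that the current
# user can read and write (normally via membership in the kvm group).
kvm_usable() {
    dev="${1:-/dev/kvm}"
    [ -c "$dev" ] && [ -r "$dev" ] && [ -w "$dev" ]
}

flag=$(cpu_virt_flag)
if [ -n "$flag" ]; then
    echo "CPU virtualization flag: $flag"
else
    echo "No vmx/svm flag found; check that VT-x/AMD-V is enabled in firmware" >&2
fi

if kvm_usable; then
    echo "/dev/kvm exists and is accessible"
else
    echo "/dev/kvm is missing or not accessible" >&2
fi
```

Until you've joined the `kvm` group and logged back in, the `/dev/kvm` check may fail even if the kernel side is fine.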

- Install `app-emulation/qemu` and `app-emulation/libvirt`.

- Start `/etc/init.d/libvirtd`, and add it to the default runlevel:

`sudo rc-service libvirtd start`

`sudo rc-update add libvirtd default`

- Add yourself to the `kvm` group:

`sudo usermod -aG kvm "$(whoami)"`

- Download the latest Win11 ISO from https://www.microsoft.com/software-download/windows11
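The Gentoo host setup steps above can be collected into a single script. This is a minimal sketch that assumes a Gentoo host running OpenRC with `sudo` available. The `run` and `setup_host` helpers and the `DRY_RUN` variable are hypothetical names, not part of any tool. By default, the script does a dry run and only prints the commands.

```sh
#!/bin/sh
# Sketch of the Gentoo/OpenRC host setup steps.
# Defaults to a dry run that only prints each command; set DRY_RUN=0 to execute.

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_host() {
    user="${1:-$(whoami)}"
    # Install QEMU and libvirt (emerge --ask still prompts before merging).
    run sudo emerge --ask app-emulation/qemu app-emulation/libvirt
    # Start libvirtd now, and have OpenRC start it at boot.
    run sudo rc-service libvirtd start
    run sudo rc-update add libvirtd default
    # Grant access to /dev/kvm; group changes apply at your next login.
    run sudo usermod -aG kvm "$user"
}

setup_host "$@"
```

If you save it as, say, `setup-host.sh`, running `DRY_RUN=0 sh setup-host.sh` executes the commands for real. Afterward, log out and back in so the new group membership takes effect.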

- Download the latest virtio-win driver ISO from https://github.com/virtio-win/virtio-win-pkg-scripts/

- Start virt-manager and create a new VM, installing from the Win11 ISO. Most defaults are OK, with the following exceptions:

a. Change the virtual disk's storage bus from SATA to virtio.

b. Add new storage, select the driver ISO, and change its device type from disk to CD-ROM. (Windows Setup has no built-in virtio storage driver, so when it can't find a disk to install to, choose "Load driver" and point it at this ISO.)

c. Change the network device type from e1000e to virtio.

- Start the VM; Win11 setup should begin.
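If you'd rather script the VM creation than click through virt-manager, `virt-install` (from the virt-manager project) can approximate the settings above, and it creates and boots the VM in one step. This is a sketch, not a tested recipe. The VM name, memory, vCPU count, 64 GiB disk size, the libvirt `default` network, and the ISO paths are all assumptions. `--os-variant win11` also needs a reasonably recent osinfo-db. The `create_win11_vm` function and `DRY_RUN` variable are hypothetical. By default, the function only prints the command.

```sh
#!/bin/sh
# Sketch: create the Win11 VM with virt-install instead of virt-manager.
# Hypothetical values: VM name, memory (MiB), vCPUs, disk size (GiB), network.
# Defaults to a dry run that prints the command; set DRY_RUN=0 to execute.

create_win11_vm() {
    win_iso="${1:?usage: create_win11_vm WIN11_ISO VIRTIO_ISO}"
    virtio_iso="${2:?usage: create_win11_vm WIN11_ISO VIRTIO_ISO}"
    # Mirrors the virt-manager changes: a virtio system disk (a), the driver
    # ISO attached as a CD-ROM (b), and a virtio network card (c).
    set -- virt-install \
        --name win11 \
        --memory 8192 \
        --vcpus 4 \
        --os-variant win11 \
        --cdrom "$win_iso" \
        --disk size=64,bus=virtio \
        --disk "path=$virtio_iso,device=cdrom" \
        --network network=default,model=virtio
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Example with hypothetical download locations.
create_win11_vm "$HOME/Downloads/Win11.iso" "$HOME/Downloads/virtio-win.iso"
```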

- Disable TPM and Secure Boot checks in the installer:

a. When the installer begins, press Shift-F10 and launch `regedit` from the command prompt.

b. Add a new key named `LabConfig` under `HKLM\SYSTEM\Setup`.

c. Add two new DWORD values to `HKLM\SYSTEM\Setup\LabConfig` named `BypassTPMCheck` and `BypassSecureBootCheck`, and set both to 1.

d. Exit Regedit, close the command prompt, and continue.

- Optional: when prompted during installation, select “English (World)” as the time and currency format. This keeps a bunch of bloatware and other crap from being installed.

- After installation, on the first boot, press Shift-F10 again and enter this to cut OOBE short:

`oobe\BypassNRO`

This triggers a reboot, followed by a less intrusive offline OOBE process (necessary because the virtio NIC has no driver yet, and a good idea anyway).

- Once the system’s up and running, run `virtio-win-guest-tools.exe` from the driver ISO to install the remaining drivers and other tools.

- If you selected “English (World)” as the time and currency format during installation, start `intl.cpl` and make sure all settings are as they should be (“English (United States)” or whatever’s appropriate for you). Do the same for the region settings in the Settings app.

- Open Microsoft Store, search for “App Installer,” and update it; Winget won’t work until you do. (TODO: is there a way to do this with a normal download instead?)

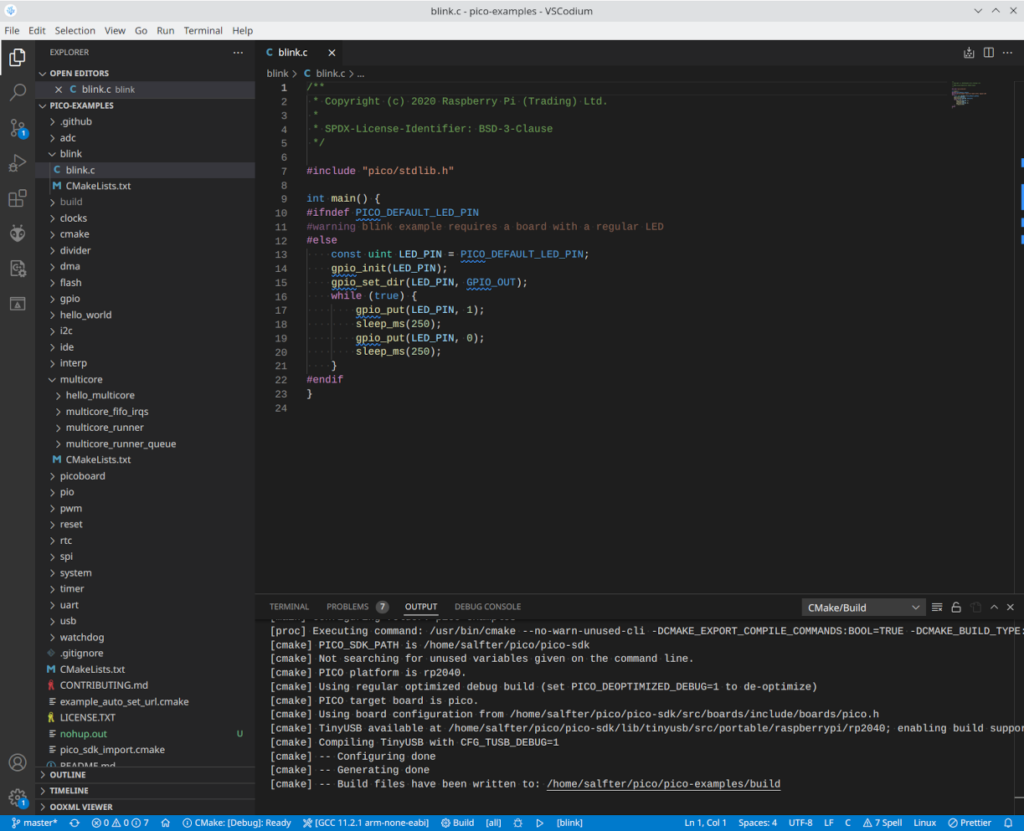

- Open a PowerShell admin window and launch Chris Titus’s tweak utility:

`irm christitus.com/win | iex`

Use it to debloat your system, install the software you want, etc.

Warning: Removing the Edge browser with this utility may break other apps (I know for certain that Teams won’t work), and it might not be possible to get it working right again without a full reinstall. It appears that Edge is embeddable within applications in much the same way that Internet Explorer once was. Plus ça change…

- Check for Windows updates in the usual manner.

More Win11-without-TPM/Secure Boot tricks

https://nerdschalk.com/install-windows-11-without-tpm/ has several methods that might be useful, especially for upgrading from Win10 or earlier (as opposed to the clean install described above).

For upgrading to Win11 22H2 from an earlier version, https://jensd.be/1860/windows/upgrade-to-windows-11-22h2-on-unsupported-hardware should be useful.